In S3 simplicity is table stakes

A few months ago at re:Invent, I spoke about Simplexity – how systems that start simple often become complex over time as they address customer feedback, fix bugs, and add features. At Amazon, we’ve spent decades working to abstract away engineering complexities so our builders can focus on what matters most: their unique business logic. There’s perhaps no better example of this journey than S3.

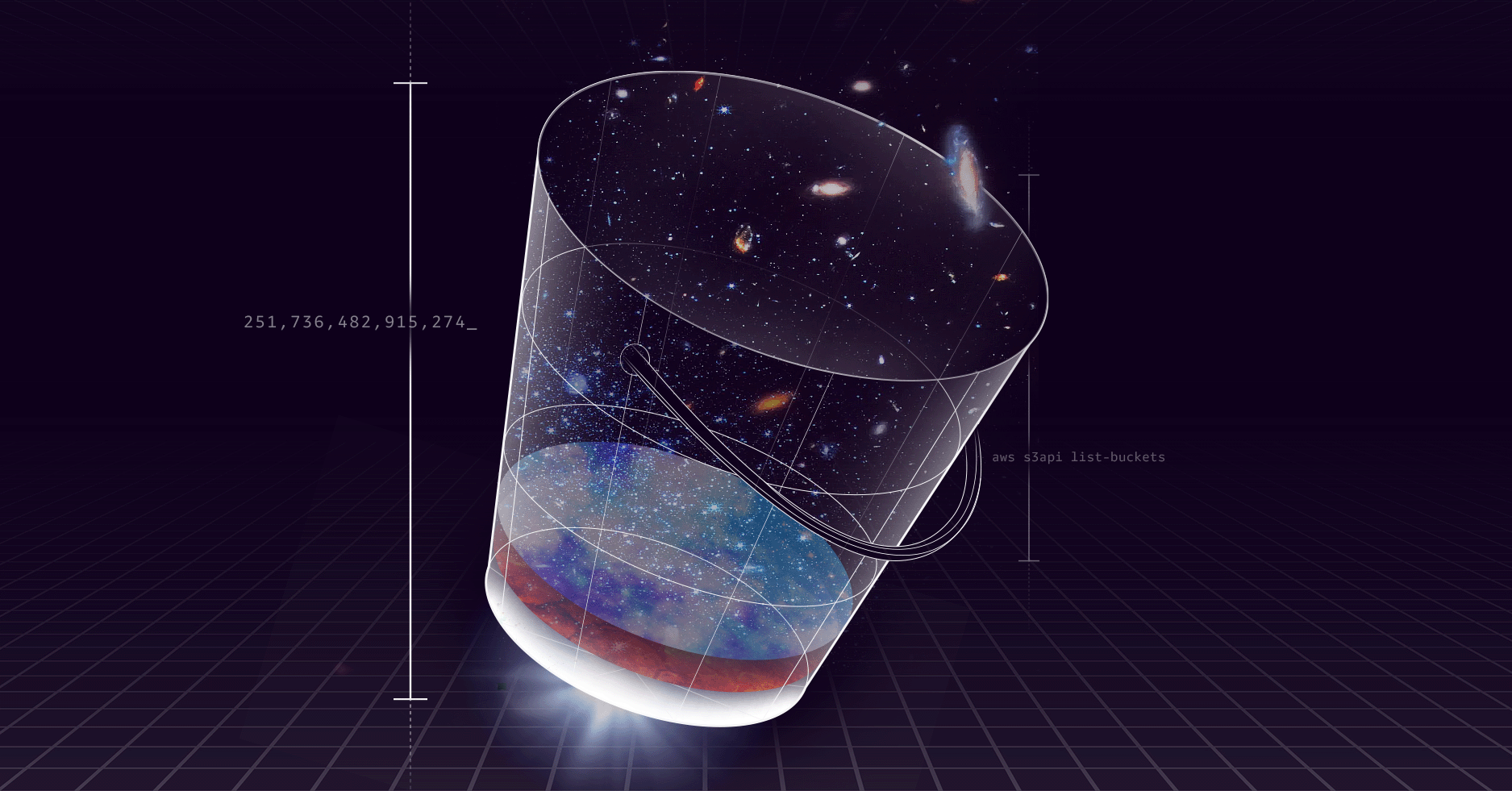

Today, on Pi Day (S3’s 19th birthday), I’m sharing a post from Andy Warfield, VP and Distinguished Engineer of S3. Andy takes us through S3’s evolution from simple object store to sophisticated data solution, illustrating how customer feedback has shaped every aspect of the service. It’s a fascinating look at how we maintain simplicity even as systems scale to handle hundreds of trillions of objects.

I hope you enjoy reading this as much as I did.

–W

On March 14, 2006, NASA’s Mars Reconnaissance Orbiter successfully entered Martian orbit after a seven-month journey from Earth, the Linux kernel 2.6.16 was released, I was getting ready for a job interview, and S3 launched as the first public AWS service.

It’s funny to reflect on a moment in time as a way of stepping back and thinking about how things have changed: The job interview was at the University of Toronto, one of about ten university interviews that I was travelling to as I finished my PhD and set out to be a professor. I’d spent the previous four years living in Cambridge, UK, working on hypervisors, storage and I/O virtualization, technologies that would all wind up being used a lot in building the cloud. But on that day, as I approached the end of grad school and the beginning of having a family and a career, the very first external customer objects were starting to land in S3.

By the time that I joined the S3 team, in 2017, S3 had just crossed a trillion objects. Today, S3 has hundreds of trillions of objects stored across 36 regions globally, and it is used as primary storage by customers in pretty much every industry and application domain on Earth. Today is Pi Day, and S3 turns 19. In its almost two decades of operation, S3 has grown into what’s got to be one of the most interesting distributed systems on Earth. In the time I’ve worked on the team, I’ve come to view the software we build, the organization that builds it, and the product expectations that a customer has of S3 as inseparable. Across these three aspects, S3 emerges as a sort of organism that continues to evolve and improve, and to learn from the developers that build on top of it.

Listening (and responding) to our builders

When I started at Amazon almost 8 years ago, I knew that S3 was used by all sorts of applications and services that I used every day. I had seen discussions, blog posts, and even research papers about building on S3 from companies like Netflix, Pinterest, SmugMug, and Snowflake. What I really didn’t appreciate was how much time our engineering teams spend talking to the engineers of customers who build using S3, and how much influence external builders have over the features that we prioritize. Almost everything we do, and certainly every one of the most popular features that we’ve launched, has been in direct response to requests from S3 customers. The past year has seen some really interesting feature launches for S3 (things like S3 Tables, which I’ll talk about more in a sec), but to me, and I think to the team overall, some of our most rewarding launches have been things like strong consistency, conditional operations, and increased per-account bucket limits. These things really matter because they remove limits and actually make S3 simpler.

This idea of being simple is really important, and it’s a place where our thinking has evolved over almost two decades of building and operating S3. A lot of people associate the term simple with the API itself: an HTTP-based storage system for immutable objects with four core verbs (PUT, GET, DELETE, and LIST) is a pretty simple thing to wrap your head around. But looking at how our API has evolved in response to the huge range of things that builders do over S3 today, I’m not sure this is the aspect of S3 that we’d really use “simple” to describe. Instead, we’ve come to think about making S3 simple as something that turns out to be a much trickier problem: we want S3 to be about working with your data and not having to think about anything other than that. When aspects of the system require extra work from developers, the lack of simplicity is distracting and time consuming for them. In a storage service, these distractions take many forms, and probably the most central aspect of S3’s simplicity is elasticity. On S3, you never have to provision capacity or performance up front, and you don’t worry about running out of space. A lot of work goes into the properties that developers take for granted: elastic scale, very high durability, and availability. We are successful only when these things can be taken for granted, because it means they aren’t distractions.

When we moved S3 to a strong consistency model, the customer reception was stronger than any of us expected (and I think we thought people would be pretty darned pleased!). We knew it would be popular, but in meeting after meeting, builders spoke about deleting code and simplifying their systems. In the past year, as we’ve started to roll out conditional operations, we’ve had a very similar reaction.
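Conditional writes show why builders could delete code. The sketch below is a hypothetical in-memory model, not S3 itself: `ToyBucket`, `PreconditionFailed`, and `increment` are illustrative names, and an MD5 digest stands in for the ETag. It mimics the two checks S3 offers on PUT, `If-None-Match: *` (create only if the key is absent) and `If-Match: <etag>` (overwrite only if the object is unchanged since it was read). In S3, a failed condition comes back as an error (typically HTTP 412 Precondition Failed) instead of a silent overwrite; in recent versions of boto3 these checks appear as the `IfNoneMatch` and `IfMatch` parameters of `put_object`.

```python
import hashlib
from typing import Dict, Optional


class PreconditionFailed(Exception):
    """Stands in for an HTTP 412 response in this toy model."""


class ToyBucket:
    """Hypothetical in-memory bucket modeling conditional PUT semantics.

    The model is single-threaded, so the check and the write cannot
    interleave; S3 performs the equivalent check atomically server-side.
    """

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    @staticmethod
    def _etag(body: bytes) -> str:
        # Assumption: an MD5 hex digest stands in for the object's ETag.
        return hashlib.md5(body).hexdigest()

    def head(self, key: str) -> Optional[str]:
        """Return the current ETag, or None if the key does not exist."""
        body = self._objects.get(key)
        return None if body is None else self._etag(body)

    def get(self, key: str) -> bytes:
        return self._objects[key]

    def put(self, key: str, body: bytes,
            if_none_match: Optional[str] = None,
            if_match: Optional[str] = None) -> str:
        current = self.head(key)
        # If-None-Match: * succeeds only when no object exists under the key.
        if if_none_match == "*" and current is not None:
            raise PreconditionFailed(f"{key} already exists")
        # If-Match succeeds only when the stored ETag equals the expected one.
        if if_match is not None and current != if_match:
            raise PreconditionFailed(f"{key} changed since it was read")
        self._objects[key] = body
        return self._etag(body)


def increment(bucket: ToyBucket, key: str) -> int:
    """Optimistic read-modify-write: retry whenever another writer wins."""
    while True:
        etag = bucket.head(key)
        if etag is None:
            try:
                bucket.put(key, b"1", if_none_match="*")
                return 1
            except PreconditionFailed:
                continue  # someone created it first; re-read
        value = int(bucket.get(key)) + 1
        try:
            bucket.put(key, str(value).encode(), if_match=etag)
            return value
        except PreconditionFailed:
            continue  # lost the race; re-read and try again
```

The retry loop in `increment` is the kind of coordination that often used to require a separate lock service or database; with conditional writes, the check travels with the storage request itself.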

One of my favorite things in my role as an engineer on the S3 team is having the opportunity to learn about the systems that our customers build. I especially love learning about startups that are building databases, file systems, and other infrastructure services directly on S3, because it’s often these customers who experience early growth in an interesting new domain and have insightful opinions on how we can improve. These customers are also some of our most eager consumers (although certainly not the only eager consumers) of new S3 features as soon as they ship. I was recently chatting with Simon Hørup Eskildsen, the CEO of Turbopuffer (a really nicely designed serverless vector database built on top of S3), and he mentioned that he has a script that checks S3 “What’s new” posts every hour and sends him notifications. I’ve seen other examples where customers guess at new APIs they hope that S3 will launch, and have scripts that run in the background probing them for years! When we launch features that introduce new REST verbs, we typically build a dashboard that reports the call frequency of the new requests, and the team is often surprised to see the dashboard posting traffic as soon as it’s up, even before the feature launches. That traffic turns out to be exactly these customer probes, guessing at a new feature.

The bucket limit announcement that we made at re:Invent last year is a similar example of an unglamorous launch that builders get excited about. Historically, there has been a limit of 100 buckets per account in S3, which in retrospect is a little weird. We focused like crazy on scaling object count and capacity, with no limits on the number of objects or capacity of a single bucket, but never really worried about customers scaling to large numbers of buckets. In recent years, though, customers started to call this out as a sharp edge, and we started to notice an interesting difference between how people think about buckets and objects. Objects are a programmatic construct: often being created, accessed, and eventually deleted entirely by other software. But the low limit on the total number of buckets made them a very human construct: it was typically a human who would create a bucket in the console or at the CLI, and it was often a human who kept track of all the buckets that were in use in an organization. What customers were telling us was that they loved the bucket abstraction as a way of grouping objects, associating things like security policy with them, and then treating them as collections of data. In many cases, our customers wanted to use buckets as a way to share data sets with their own customers. They wanted buckets to become a programmatic construct.

So we got together and did the work to scale bucket limits, and it’s an interesting example of how our limits and sharp edges aren’t just a thing that can frustrate customers, but can also be really tricky to unwind at scale. In S3, the bucket metadata system works differently from the much larger namespace that tracks object metadata. That system, which we call “Metabucket,” has already been rewritten for scale more than once, even with the 100-bucket-per-account limit. There was obvious work required to scale Metabucket further, in anticipation of customers creating millions of buckets per account. But there were more subtle aspects of addressing this scale: we had to think hard about the impact of larger numbers of bucket names, the security consequences of programmatic bucket creation in application design, and even performance and UI concerns. One interesting example is that there are many places in the AWS console where other services pop up a widget that allows a customer to browse their S3 buckets. Athena, for example, does this to let you specify a location for query results. There are a few forms of this widget, depending on the use case, and they populate themselves by listing all the buckets in an account, and then often by calling HeadBucket on each individual bucket to collect additional metadata. As the team started to look at scaling, they created a test account with an enormous number of buckets and started to test rendering times in the AWS console, and in several places, rendering the list of S3 buckets could take tens of minutes to complete. As we looked more broadly at user experience for bucket scaling, we had to work across tens of services on this rendering issue.
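A back-of-the-envelope model shows why a list-then-HeadBucket-on-each widget degrades so badly. The function below is a hypothetical estimate, not a description of any real console code, and the inputs (0.5 s for the listing, 20 ms per HeadBucket) are assumed round numbers rather than measured latencies. At those assumed numbers, 100,000 buckets queried one at a time take about 2,000 seconds, a bit over half an hour.

```python
import math
from typing import Optional


def render_seconds(num_buckets: int, list_s: float, head_s: float,
                   concurrency: int = 1,
                   visible: Optional[int] = None) -> float:
    """Rough time for a bucket-picker widget that lists buckets and then
    issues HeadBucket calls. All latencies are assumed inputs.

    concurrency: number of HeadBucket requests in flight at once.
    visible: if set, only the buckets actually shown are HEAD-ed.
    """
    heads = num_buckets if visible is None else min(visible, num_buckets)
    rounds = math.ceil(heads / concurrency)  # batches of parallel HEADs
    return list_s + rounds * head_s


for n in (100, 10_000, 100_000):
    minutes = render_seconds(n, list_s=0.5, head_s=0.02) / 60
    print(f"{n:>7,} buckets: ~{minutes:.1f} minutes")
```

The `visible` and `concurrency` knobs illustrate two generic mitigations (fetch metadata only for what is on screen, or overlap requests); they are not a claim about how the console teams actually fixed these widgets.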
We also introduced a new paged version of the ListBuckets API call, and a default limit of 10,000 buckets until a customer opts in to a higher resource limit, as a guardrail against causing them the same type of problem that we’d seen in console rendering. Even after launch, the team carefully tracked customer behaviour on ListBuckets calls so that we could proactively reach out if we thought the new limit was having an unexpected impact.
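Consuming a paged ListBuckets means following a continuation token until the service stops returning one. The sketch below takes the list call as a parameter so it runs without AWS credentials. The request parameters (`MaxBuckets`, `ContinuationToken`) and response fields (`Buckets`, `ContinuationToken`) mirror the ListBuckets API as I understand it, so check the current API reference before relying on them; with boto3 you would pass something like `s3_client.list_buckets`. The `make_fake_list_buckets` helper is a hypothetical stand-in for the service.

```python
from typing import Any, Callable, Dict, Iterator, List


def iter_buckets(list_buckets: Callable[..., Dict[str, Any]],
                 page_size: int = 1000) -> Iterator[Dict[str, Any]]:
    """Yield every bucket, one page at a time, following ContinuationToken."""
    kwargs: Dict[str, Any] = {"MaxBuckets": page_size}
    while True:
        page = list_buckets(**kwargs)
        yield from page.get("Buckets", [])
        token = page.get("ContinuationToken")
        if not token:
            break  # no token means this was the last page
        kwargs["ContinuationToken"] = token


def make_fake_list_buckets(names: List[str]) -> Callable[..., Dict[str, Any]]:
    """Hypothetical fake service that pages over a fixed list of names.
    Parameter names match the API's keyword arguments, hence the casing."""
    def list_buckets(MaxBuckets: int,
                     ContinuationToken: str = "0") -> Dict[str, Any]:
        start = int(ContinuationToken)
        end = start + MaxBuckets
        page: Dict[str, Any] = {
            "Buckets": [{"Name": n} for n in names[start:end]]}
        if end < len(names):
            page["ContinuationToken"] = str(end)  # opaque to the caller
        return page
    return list_buckets
```

Because the generator pulls one page at a time, a UI built on it can render the first page as soon as it arrives instead of waiting for the complete list.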

Performance matters

Over the years, as S3 has evolved from a system primarily used for archival data over relatively slow internet links into something far more capable, customers naturally wanted to do more and more with their data. This created a fascinating flywheel where improvements in performance drove demand for even more performance, and any limitations became yet another source of friction that distracted developers from their core work.

Our approach to performance ended up mirroring our philosophy about capacity – it needed to be fully elastic. We decided that any customer should be entitled to use the entire performance capability of S3, as long as it didn’t interfere with others. This pushed us in two important directions: first, to think proactively about helping customers drive massive performance from their data without imposing complexities like provisioning, and second, to build sophisticated automations and guardrails that let customers push hard while still playing well with others. We started by being transparent about S3’s design, documenting everything from request parallelization to retry strategies, and then built these best practices into our Common Runtime (CRT) library. Today, we see individual GPU instances using the CRT to drive hundreds of gigabits per second in and out of S3.
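Two of the documented practices mentioned above, splitting a large GET into byte ranges fetched in parallel and retrying failed requests with exponential backoff, fit in a short sketch. This is not the CRT’s implementation: `fetch_range` is a hypothetical callable (in practice it might wrap a GET with a `Range: bytes=start-end` header), and the 8 MiB part size, worker count, retry count, and delays are assumed values. S3’s performance guidance mentions byte-range sizes of around 8 MB or 16 MB as typical.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

PART_SIZE = 8 * 1024 * 1024  # assumed part size (8 MiB)


def byte_ranges(size: int, part_size: int = PART_SIZE) -> List[Tuple[int, int]]:
    """Split [0, size) into inclusive (start, end) pairs, as HTTP Range uses."""
    return [(start, min(start + part_size, size) - 1)
            for start in range(0, size, part_size)]


def with_retries(fn: Callable[[], bytes], attempts: int = 5,
                 base_delay: float = 0.05, max_delay: float = 2.0) -> bytes:
    """Call fn, retrying with capped exponential backoff and full jitter:
    before retry n, sleep a random time in [0, min(max_delay, base * 2**n)]."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts - 1):
        try:
            return fn()
        except Exception:  # real code should retry only retryable errors
            cap = min(max_delay, base_delay * 2 ** attempt)
            time.sleep(random.uniform(0, cap))
    return fn()  # final attempt: let any error propagate


def parallel_get(size: int, fetch_range: Callable[[int, int], bytes],
                 part_size: int = PART_SIZE, workers: int = 16) -> bytes:
    """Fetch an object of known size as parallel byte-range requests."""
    ranges = byte_ranges(size, part_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, so parts can be joined directly.
        parts = pool.map(lambda r: with_retries(lambda: fetch_range(*r)), ranges)
        return b"".join(parts)
```

Jitter spreads retries out in time, so many clients that failed together do not all retry at the same instant.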

While much of our initial focus was on throughput, customers increasingly asked for their data to be quicker to access too. This led us to launch S3 Express One Zone in 2023, our first SSD storage class, which we designed as a single-AZ offering to minimize latency. The appetite for performance continues to grow – we have machine learning customers like Anthropic driving tens of terabytes per second, while entertainment companies stream media directly from S3. If anything, I expect this trend to accelerate as customers pull the experience of using S3 closer to their applications and ask us to support increasingly interactive workloads. It’s another example of how removing limitations – in this case, performance constraints – lets developers focus on building rather than working around sharp edges.

The tension between simplicity and velocity

The pursuit of simplicity has taken us in all sorts of interesting directions over the past two decades. There are all the examples that I mentioned above, from scaling bucket limits to enhancing performance, as well as countless other improvements, especially around features like cross-region replication, Object Lock, and versioning, which all provide very deliberate guardrails for data protection and durability. With the rich history of S3’s evolution, it’s easy to work through a long list of features and improvements and talk about how each one is an example of making it simpler to work with your objects.

But now I’d like to make a bit of a self-critical observation about simplicity: in pretty much every example that I’ve mentioned so far, the improvements that we make toward simplicity are really improvements against an initial feature that wasn’t simple enough. Putting that another way, we launch things that need, over time, to become simpler. Sometimes we are aware of the gaps and sometimes we learn about them later. The thing that I want to point to here is that there’s actually a really important tension between simplicity and velocity, and it’s a tension that kind of runs both ways. On one hand, the pursuit of simplicity is a bit of a “chasing perfection” thing, in that you can never get all the way there, and so there’s a risk of over-designing and second-guessing in ways that prevent you from ever shipping anything. But on the other hand, racing to release something with painful gaps can frustrate early customers and, worse, it can leave you with backloaded work that is more expensive to simplify later. This tension between simplicity and velocity has been the source of some of the most heated product discussions that I’ve seen in S3, and it’s a thing that I feel the team navigates pretty deliberately. But it’s also a place where, when you focus your attention on it, you are never satisfied, because you invariably feel like you are either moving too slowly or not holding a high enough bar. To me, this paradox perfectly characterizes the angst that we feel as a team on every single product release.

S3 Tables: Everything is an object, but objects aren’t everything

People have been storing tables in S3 for over a decade. The Apache Parquet format was launched in 2013 as a way to efficiently represent tabular data, and it’s become a de facto representation for all sorts of datasets in S3, and a basis for millions of data lakes. S3 stores exabytes of Parquet data and serves hundreds of petabytes of it every day. Over time, Parquet evolved to support connectors for popular analytics tools like Apache Hadoop and Spark, and integrations with Hive to allow large numbers of Parquet files to be combined into a single table.
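The Hive convention mentioned above encodes partition columns in object key prefixes (for example `sales/dt=2025-03-14/part-00000.parquet`), so an engine can treat every Parquet object under a table prefix as one table and skip whole partitions when a query filters on a partition column. The sketch below groups keys by their `column=value` segments; the table prefix, column name, and keys are made-up examples, and real engines also handle type coercion, escaping, and many other details.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Partition = Tuple[Tuple[str, str], ...]


def group_by_partition(keys: Iterable[str],
                       table_prefix: str) -> Dict[Partition, List[str]]:
    """Group Parquet object keys under table_prefix by their Hive-style
    `column=value` path segments."""
    groups: Dict[Partition, List[str]] = defaultdict(list)
    for key in keys:
        if not (key.startswith(table_prefix) and key.endswith(".parquet")):
            continue  # not a data file of this table
        segments = key[len(table_prefix):].split("/")[:-1]  # drop file name
        partition = tuple(tuple(s.split("=", 1)) for s in segments if "=" in s)
        groups[partition].append(key)
    return dict(groups)


def prune(groups: Dict[Partition, List[str]], column: str,
          value: str) -> List[str]:
    """Return only the files whose partition matches column=value."""
    return [key for part, files in groups.items()
            if dict(part).get(column) == value for key in files]
```

Each partition is still just a pile of immutable objects, which is exactly why finer-grained mutations were hard to express on top of this layout.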

The more popular Parquet became, and the more that analytics workloads evolved to work with Parquet-based tables, the more the sharp edges of working with Parquet stood out. Developers loved being able to build data lakes over Parquet, but they wanted a richer table abstraction: something that supports finer-grained mutations, like inserting or updating individual rows, as well as evolving table schemas by adding or removing columns, and this was difficult to achieve, especially over immutable object storage. In 2017, the Apache Iceberg project launched in order to define a richer table abstraction above Parquet.

Objects are simple and immutable, but tables are neither. So Iceberg introduced a metadata layer and a genuinely innovative approach to organizing tabular data, building a table construct that could be composed from S3 objects. It represents a table as a series of snapshot-based updates, where each snapshot summarizes a collection of mutations from the last version of the table. The result of this approach is that small updates don’t require the whole table to be rewritten, and that the table is effectively versioned. It’s easy to step forward and backward in time and review old states, and the snapshots lend themselves to the transactional mutations that databases need to update many items atomically.
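A toy model helps show why snapshots make small updates cheap and old states easy to revisit. This is not Iceberg’s metadata format (real tables track data files through metadata files, manifest lists, and manifests); the `Snapshot` and `Table` classes are hypothetical, and each “data file” is just a name standing in for an immutable object.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    parent_id: Optional[int]
    data_files: FrozenSet[str]  # immutable objects that make up this version


@dataclass
class Table:
    snapshots: List[Snapshot] = field(default_factory=list)

    def current(self) -> Optional[Snapshot]:
        return self.snapshots[-1] if self.snapshots else None

    def commit(self, added: FrozenSet[str] = frozenset(),
               removed: FrozenSet[str] = frozenset()) -> Snapshot:
        """Record a mutation as a new snapshot. No existing data file is
        modified: the new snapshot simply references a different set."""
        cur = self.current()
        files = cur.data_files if cur else frozenset()
        snap = Snapshot(snapshot_id=len(self.snapshots) + 1,
                        parent_id=cur.snapshot_id if cur else None,
                        data_files=(files - removed) | added)
        self.snapshots.append(snap)
        return snap

    def as_of(self, snapshot_id: int) -> FrozenSet[str]:
        """Time travel: the set of files visible at an earlier snapshot."""
        for snap in self.snapshots:
            if snap.snapshot_id == snapshot_id:
                return snap.data_files
        raise KeyError(snapshot_id)


table = Table()
table.commit(added=frozenset({"f1.parquet", "f2.parquet"}))
table.commit(added=frozenset({"f3.parquet"}))  # a small append
table.commit(added=frozenset({"f2b.parquet"}),  # an update that rewrites
             removed=frozenset({"f2.parquet"}))  # only the affected file
```

After the third commit, `f2.parquet` is no longer part of the current table but is still referenced by snapshot 1, which is why cleaning up old files has to be done with care.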

Iceberg and other open table formats like it are effectively storage systems in their own right, but because their structure is externalized (customer code manages the relationship between Iceberg data and metadata objects, and performs tasks like garbage collection), some challenges emerge. One is that small snapshot-based updates tend to produce a lot of fragmentation that can hurt table performance, so it’s necessary to compact and garbage collect tables in order to clean up this fragmentation, reclaim deleted space, and help performance. The other is that because these tables are made up of many objects (frequently thousands) and are accessed with very application-specific patterns, many existing S3 features, like Intelligent-Tiering and cross-region replication, don’t work exactly as expected on them.
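The two maintenance jobs described above can be sketched against a simple model in which each snapshot is a set of object names. The 128 MiB target size, the “smaller than a quarter of the target” threshold, the size-ordered packing rule, and the “keep the last N snapshots” retention policy are all assumptions for illustration; real Iceberg maintenance (for example Spark’s `rewrite_data_files`, `expire_snapshots`, and `remove_orphan_files` procedures) has many more options.

```python
from typing import Dict, List, Set

TARGET_BYTES = 128 * 1024 * 1024  # assumed target data-file size (128 MiB)


def plan_compaction(files: Dict[str, int], target: int = TARGET_BYTES,
                    small: int = TARGET_BYTES // 4) -> List[List[str]]:
    """Pack files smaller than `small`, in size order, into groups of at
    most `target` bytes; each group would be rewritten as one larger file
    and committed as a new snapshot."""
    groups: List[List[str]] = []
    current: List[str] = []
    current_size = 0
    for name, size in sorted(files.items(), key=lambda kv: kv[1]):
        if size >= small:
            continue  # already reasonably large; leave it alone
        if current and current_size + size > target:
            groups.append(current)
            current, current_size = [], 0
        current.append(name)
        current_size += size
    if len(current) > 1:
        groups.append(current)  # rewriting a lone small file gains nothing
    return groups


def expire_and_collect(snapshots: List[Set[str]], keep_last: int,
                       all_objects: Set[str]) -> Set[str]:
    """Expire all but the newest `keep_last` snapshots (mutating the list)
    and return objects that no surviving snapshot references. That covers
    files replaced by compaction and orphans left by failed writes."""
    if keep_last < 1:
        raise ValueError("keep_last must be at least 1")
    del snapshots[:-keep_last]
    live: Set[str] = set().union(*snapshots)
    return all_objects - live
```

Note the ordering constraint: a file can only be deleted after every snapshot that references it has expired, which is the kind of bookkeeping that becomes the customer’s job when the table structure is externalized.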

As we talked to customers who had started operating highly scaled, often multi-petabyte databases over Iceberg, we heard enthusiasm about the richer set of capabilities that comes from interacting with a table data type instead of an object data type. But we also heard frustrations and tough lessons from the fact that customer code was responsible for things like compaction, garbage collection, and tiering, all things that we do internally for objects. These sophisticated Iceberg customers pointed out, pretty starkly, that with Iceberg what they were really doing was building their own table primitive over S3 objects, and they asked us why S3 wasn’t able to do more of the work to make that experience simple. This was the voice that led us to really start exploring a first-class table abstraction in S3, and that ultimately led to our launch of S3 Tables.

The work to build tables hasn’t just been about offering a “managed Iceberg” product on top of S3. Tables are among the most popular data types on S3, and unlike video, images, or PDFs, they involve a complex cross-object structure and the need to support conditional operations, background maintenance, and integrations with other storage-level features. So, in deciding to launch S3 Tables, we were excited about Iceberg as an open table format (OTF) and the way that it implemented a table abstraction over S3, but we wanted to approach that abstraction as if it were a first-class S3 construct, just like an object. The tables that we launched at re:Invent in 2024 integrate Iceberg with S3 in a few ways. First, each table surfaces behind its own endpoint and is a resource from a policy perspective, which makes it much easier to control and share access by setting policy on the table itself and not on the individual objects that it is composed of. Second, we built APIs to help simplify table creation and snapshot commit operations. And third, by understanding how Iceberg lays out objects, we were able to make internal optimizations that improve performance.
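Committing a snapshot in a format like Iceberg generally comes down to a compare-and-swap on the table’s pointer to its current metadata: a writer succeeds only if nobody else committed since it read the table, and otherwise rebuilds its change on the newer state and retries. The sketch models that optimistic-concurrency loop with a hypothetical in-memory `Catalog`; the class, its method names, and the version-token scheme are illustrative assumptions, not the S3 Tables API.

```python
import itertools
import threading
from typing import Callable, Tuple


class CommitConflict(Exception):
    """Raised when the table changed between read and commit."""


class Catalog:
    """Hypothetical catalog entry holding one table's metadata pointer."""

    def __init__(self, metadata_location: str) -> None:
        self._lock = threading.Lock()
        self._tokens = itertools.count(1)
        self._state: Tuple[str, str] = (metadata_location, str(next(self._tokens)))

    def load(self) -> Tuple[str, str]:
        """Return (metadata_location, version_token)."""
        with self._lock:
            return self._state

    def swap(self, expected_token: str, new_location: str) -> str:
        """Atomically repoint the table if the token still matches."""
        with self._lock:
            _, token = self._state
            if token != expected_token:
                raise CommitConflict(f"expected {expected_token}, found {token}")
            new_token = str(next(self._tokens))
            self._state = (new_location, new_token)
            return new_token


def commit_with_retry(catalog: Catalog,
                      write_metadata: Callable[[str], str],
                      max_attempts: int = 10) -> str:
    """Read the current pointer, write new metadata derived from it (a new
    immutable object), then swap; on conflict, start over from the newer state."""
    for _ in range(max_attempts):
        base_location, token = catalog.load()
        new_location = write_metadata(base_location)
        try:
            catalog.swap(token, new_location)
            return new_location
        except CommitConflict:
            continue
    raise CommitConflict("gave up after repeated conflicts")
```

Because a losing writer rebuilds from the winner’s metadata, concurrent commits are serialized without any update being lost; moving that loop behind a service API is one way to take it off the customer’s plate.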

We knew that we were making a simplicity versus velocity decision. We had demonstrated to ourselves and to preview customers that S3 Tables were an improvement relative to customer-managed Iceberg in S3, but we also knew that we had a lot of simplification and improvement left to do. In the 14 weeks since they launched, it’s been great to see this velocity take shape as Tables have introduced full support for the Iceberg REST Catalog (IRC) API, and the ability to query directly in the console. But we still have plenty of work left to do.

Historically, we’ve always talked about S3 as an object store and then gone on to talk about all of the properties of objects — security, elasticity, availability, durability, performance — that we work to deliver in the object API. I think one thing that we’ve learned from the work on Tables is that it’s these properties of storage that really define S3 much more than the object API itself.

There was a consistent reaction from customers that the abstraction resonated with them: that it was, intuitively, “all the things that S3 is for objects, but for a table.” We need to work to make sure that Tables match this expectation, and that they are just as simple, universal, and developer-facing a primitive as objects themselves.

By working to really generalize the table abstraction on S3, I hope we’ve built a bridge between analytics engines and the much broader set of general application data that’s out there. We’ve invested in a collaboration with DuckDB to accelerate Iceberg support in DuckDB, and I expect that we will focus a lot on other opportunities to really simplify the bridge between developers and tabular data, like the many applications that store internal data in tabular formats, often embedding library-style databases like SQLite. My sense is that we’ll know we’ve been successful with S3 Tables when we start seeing customers move back and forth with the same data, using it both for direct analytics from tools like Spark and for direct interaction with their own applications and data ingestion pipelines.

Looking ahead

As S3 approaches the end of its second decade, I’m struck by how fundamentally our understanding of what S3 is has evolved. Our customers have consistently pushed us to reimagine what’s possible, from scaling to handle hundreds of trillions of objects to introducing entirely new data types like S3 Tables.

Today, on Pi Day, S3’s 19th birthday, I hope what you see is a team that remains deeply excited and invested in the system we’re building. As we look to the future, I’m excited knowing that our builders will keep finding novel ways to push the boundaries of what storage can be. The story of S3’s evolution is far from over, and I can’t wait to see where our customers take us next. Meanwhile, we’ll keep working as a team on building storage that you can take for granted.

As Werner would say: “Now, go build!”