Introducing Distill CLI: An efficient, Rust-powered tool for media summarization

A few weeks ago, I wrote about a project our team has been working on called Distill, a simple application that summarizes and extracts important details from our daily meetings. At the end of that post, I promised you a CLI version written in Rust. After a few code reviews from Rustaceans at Amazon and a bit of polish, today, I’m ready to share the Distill CLI.

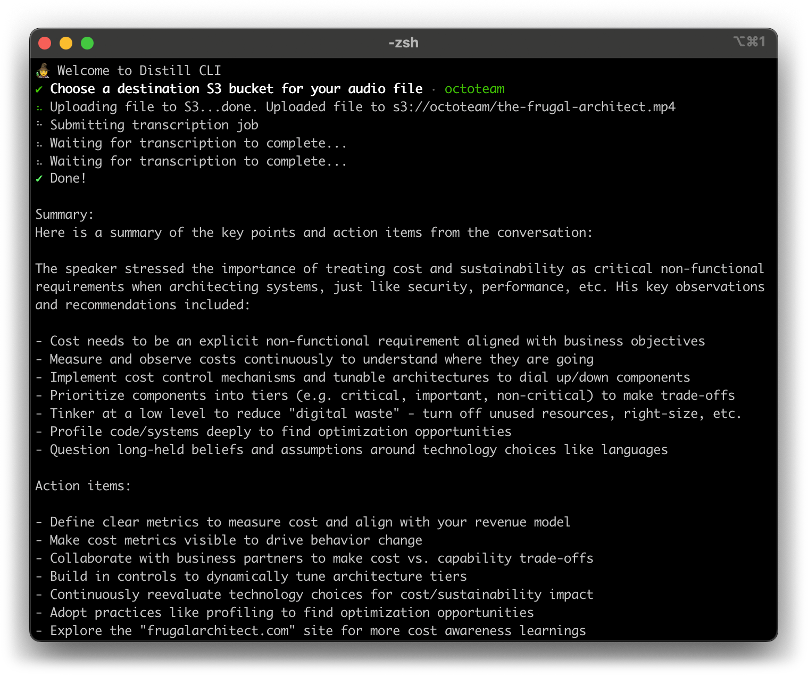

After you build from source, simply pass Distill CLI a media file and select the S3 bucket where you’d like to store the file. Today, Distill supports outputting summaries as Word documents, text files, and printing directly to terminal (the default). You’ll find that it’s easily extensible – my team (OCTO) is already using it to export summaries of our team meetings directly to Slack (and working on support for Markdown).

Tinkering is a good way to learn and be curious

The way we build has changed quite a bit since I started working with distributed systems. Today, compute, storage, databases, and networking are all available on demand if you want them. As builders, our focus has shifted to faster and faster innovation, and along the way tinkering at the system level has become a bit of a lost art. But tinkering is as important now as it has ever been. I vividly remember the hours spent fiddling with BSD 2.8 to make it work on PDP-11s, and it cemented my never-ending love for OS software. Tinkering provides us with an opportunity to really get to know our systems. To experiment with new languages, frameworks, and tools. To look for efficiencies big and small. To find inspiration. And this is exactly what happened with Distill.

We rewrote one of our Lambda functions in Rust, and observed that cold starts were 12x faster and the memory footprint decreased by 73%. Before I knew it, I began to think about other ways I could make the entire process more efficient for my use case.

The original proof of concept stored media files, transcripts, and summaries in S3, but since I’m running the CLI locally, I realized I could store the transcripts and summaries in memory and save myself a few writes to S3. I also wanted an easy way to upload media and monitor the summarization process without leaving the command line, so I cobbled together a simple UI that provides status updates and lets me know when anything fails. The original showed what was possible, it left room for tinkering, and it was the blueprint that I used to write the Distill CLI in Rust.

I encourage you to give it a try, and let me know if you find any bugs or edge cases, or have ideas to improve it.

Builders are choosing Rust

As technologists, we have a responsibility to build sustainably. And this is where I really see Rust’s potential. With its emphasis on performance, memory safety, and concurrency, there is a real opportunity to decrease computational and maintenance costs. Its memory safety guarantees eliminate obscure bugs that plague C and C++ projects, reducing crashes without compromising performance. Its concurrency model enforces strict compile-time checks, preventing data races and making the most of multi-core processors. And while compilation errors can be bloody aggravating in the moment, fewer developers chasing bugs and more time focused on innovation are always good things. That’s why it’s become a go-to for builders who thrive on solving problems at unprecedented scale.
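To make the data-race point concrete, here is a minimal sketch using only the standard library. It is not taken from the Distill CLI; the function name `parallel_sum` and its parameters are hypothetical and exist purely for illustration. Several threads add into one shared total, and the compiler only accepts the program because the total is wrapped in `Arc` (shared ownership) and `Mutex` (exclusive access).

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Sums `values` using up to `workers` threads that share one running total.
/// Hypothetical helper for illustration only; not part of the Distill CLI.
fn parallel_sum(values: Vec<u64>, workers: usize) -> u64 {
    // Sharing a plain `&mut u64` across spawned threads would be rejected by
    // the compiler, so the total lives behind Arc<Mutex<_>> instead.
    let total = Arc::new(Mutex::new(0u64));

    // Split the input into roughly equal chunks; never use a chunk size of 0.
    let workers = workers.max(1);
    let chunk_size = ((values.len() + workers - 1) / workers).max(1);

    let handles: Vec<_> = values
        .chunks(chunk_size)
        .map(|chunk| {
            let chunk = chunk.to_vec(); // each thread owns its own copy of its chunk
            let total = Arc::clone(&total); // another handle to the same total
            thread::spawn(move || {
                let partial: u64 = chunk.iter().sum();
                // The lock guarantees that only one thread updates the total at a time.
                *total.lock().unwrap() += partial;
            })
        })
        .collect();

    // Wait for every worker to finish before reading the result.
    for handle in handles {
        handle.join().unwrap();
    }

    let result = *total.lock().unwrap();
    result
}
```

Calling `parallel_sum((1..=100).collect(), 4)` returns the same total as a single-threaded sum. Removing the `Mutex` and mutating a shared integer directly from the spawned closures is a compile error, not a bug that surfaces at runtime.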

Since 2018, we have increasingly leveraged Rust for critical workloads across various services like S3, EC2, DynamoDB, Lambda, Fargate, and Nitro, especially in scenarios where hardware costs are expected to dominate over time. In his guest post last year, Andy Warfield wrote a bit about ShardStore, the bottom-most layer of S3’s storage stack that manages data on each individual disk. He explained that Rust was chosen to get type safety and structured language support to help identify bugs sooner, and described how the team wrote libraries to extend that type safety to on-disk structures. If you haven’t already, I recommend that you read the post, and the SOSP paper.

This trend is mirrored across the industry. Discord moved their Read States service from Go to Rust to address large latency spikes caused by garbage collection. The Rust version is 10x faster, with their worst tail latencies reduced almost 100x. Similarly, Figma rewrote performance-sensitive parts of their multiplayer service in Rust, and they’ve seen significant server-side performance improvements, such as reducing peak average CPU usage per machine by 6x.

The point is that if you are serious about cost and sustainability, there is no reason not to consider Rust.

Rust is hard…

Rust has a reputation for being a difficult language to learn and I won’t dispute that there is a learning curve. It will take time to get familiar with the borrow checker, and you will fight with the compiler. It’s a lot like writing a PRFAQ for a new idea at Amazon. There is a lot of friction up front, which is sometimes hard when all you really want to do is jump into the IDE and start building. But once you’re on the other side, there is tremendous potential to pick up velocity. Remember, the cost to build a system, service, or application is nothing compared to the cost of operating it, so the way you build should be continually under scrutiny.

But you don’t have to take my word for it. Earlier this year, The Register published findings from Google that showed their Rust teams were twice as productive as teams using C++, and that the same size team using Rust instead of Go was as productive with more correctness in their code. There are no bonus points for growing headcount to tackle avoidable problems.

Closing thoughts

I want to be crystal clear: this is not a call to rewrite everything in Rust. Just as monoliths are not dinosaurs, there is no single programming language to rule them all and not every application will have the same business or technical requirements. It’s about using the right tool for the right job. This means questioning the status quo, and continuously looking for ways to incrementally optimize your systems – to tinker with things and measure what happens. Something as simple as switching the library you use to serialize and deserialize JSON from Python’s standard library to orjson might be all you need to speed up your app, reduce your memory footprint, and lower costs in the process.
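The tinker-and-measure loop can be as small as timing two interchangeable implementations and checking that they agree. Here is a minimal sketch using only the Rust standard library. The helper `time_it` and the two string-building functions are hypothetical stand-ins chosen for illustration; they are not the JSON libraries mentioned above, and a single timed run is only a rough signal, not a proper benchmark.

```rust
use std::fmt::Write;
use std::time::{Duration, Instant};

/// Runs `f` once and returns its result together with the elapsed wall-clock time.
fn time_it<T>(f: impl FnOnce() -> T) -> (T, Duration) {
    let start = Instant::now();
    let out = f();
    (out, start.elapsed())
}

/// Baseline: builds a comma-separated list, allocating a new String on every step.
fn join_naive(items: &[u32]) -> String {
    let mut s = String::new();
    for (i, item) in items.iter().enumerate() {
        s = if i > 0 {
            format!("{s},{item}")
        } else {
            format!("{item}")
        };
    }
    s
}

/// Candidate: produces the same output by appending into a single buffer.
fn join_buffered(items: &[u32]) -> String {
    let mut s = String::new();
    for (i, item) in items.iter().enumerate() {
        if i > 0 {
            s.push(',');
        }
        // Writing into a String cannot fail, so unwrap is safe here.
        write!(s, "{item}").unwrap();
    }
    s
}
```

To compare the two, call `time_it(|| join_naive(&items))` and `time_it(|| join_buffered(&items))` on the same input, confirm the outputs are equal, and then look at the two durations. Run it as a release build and repeat it a few times before drawing conclusions.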

If you take nothing else away from this post, I encourage you to actively look for efficiencies in all aspects of your work. Tinker. Measure. Because everything has a cost, and cost is a pretty good proxy for a sustainable system.

Now, go build!

A special thank you to AWS Rustaceans Niko Matsakis and Grant Gurvis for their code reviews and feedback while developing the Distill CLI.