Bringing the Magic of Amazon AI and Alexa to Apps on AWS.

From the early days of Amazon, Machine learning (ML) has played a critical role in the value we bring to our customers. Around 20 years ago, we used machine learning in our recommendation engine to generate personalized recommendations for our customers. Today, there are thousands of machine learning scientists and developers applying machine learning in various places, from recommendations to fraud detection, from inventory levels to book classification to abusive review detection. There are many more application areas where we use ML extensively: search, autonomous drones, robotics in fulfillment centers, text processing and speech recognition (such as in Alexa) etc.

Among machine learning algorithms, a class of algorithms called deep learning has come to represent those algorithms that can absorb huge volumes of data and learn elegant and useful patterns within that data: faces inside photos, the meaning of a text, or the intent of a spoken word.After over 20 years of developing these machine learning and deep learning algorithms and end user services listed above, we understand the needs of both the machine learning scientist community that builds these machine learning algorithms as well as app developers who use them. We also have a great deal of machine learning technology that can benefit machine scientists and developers working outside Amazon. Last week, I wrote a blog about helping the machine learning scientist community select the right deep learning framework from among many we support on AWS such as MxNet, TensorFlow, Caffe, etc.

Today, I want to focus on helping app developers who have chosen to develop their apps on AWS and have in the past developed some of the seminal apps of our times on AWS, such as Netflix, AirBnB, or Pinterest or created internet connected devices powered by AWS such as Alexa and Dropcam. Many app developers have been intrigued by the magic of Alexa and other AI powered products they see being offered or used by Amazon and want our help in developing their own magical apps that can hear, see, speak, and understand the world around them.

For example, they want us to help them develop chatbots that understand natural language, build Alexa-style conversational experiences for mobile apps, dynamically generate speech without using expensive voice actors, and recognize concepts and faces in images without requiring human annotators. However, until now, very few developers have been able to build, deploy, and broadly scale applications with AI capabilities because doing so required specialized expertise (with Ph.D.s in ML and neural networks) and access to vast amounts of data. Effectively applying AI involves extensive manual effort to develop and tune many different types of machine learning and deep learning algorithms (e.g. automatic speech recognition, natural language understanding, image classification), collect and clean the training data, and train and tune the machine learning models. And this process must be repeated for every object, face, voice, and language feature in an application.

Today, I am excited to announce that we are launching three new Amazon AI services that eliminate all of this heavy lifting, making AI broadly accessible to all app developers by offering Amazon's powerful and proven deep learning algorithms and technologies as fully managed services that any developer can access through an API call or a few clicks in the AWS Management Console. These services are Amazon Lex, Amazon Polly, and Amazon Rekognition that will help AWS app developers build these next generation of magical, intelligent apps. Amazon AI services make the full power of Amazon's natural language understanding, speech recognition, text-to-speech, and image analysis technologies available at any scale, for any app, on any device, anywhere.

Amazon Lex

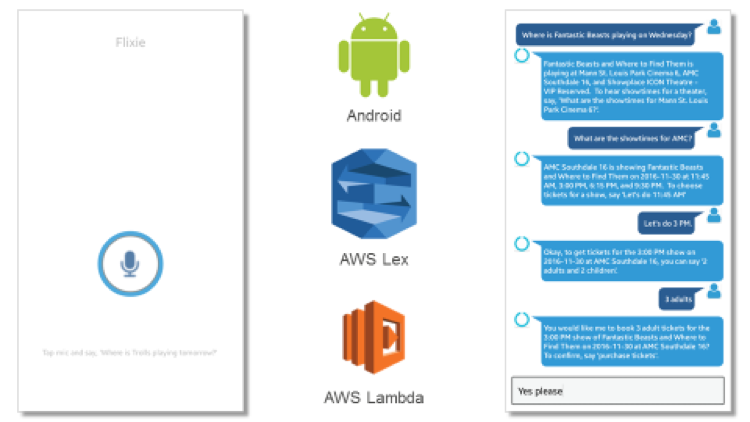

After the launch of the Alexa Skill Kit (ASK), customers loved the ability to build voice bots or skills for Alexa. They also started asking us to give them access to the technology that powers Alexa, so that they can add a conversational interface (using voice or text) to their mobile apps. They also wanted the capability to publish their bots on chat services like Facebook Messenger and Slack.

Amazon Lex is a new service for building conversational interfaces using voice and text. The same conversational engine that powers Alexa is now available to any developer, making it easy to bring sophisticated, natural language 'chatbots' to new and existing applications. The power of Alexa in the hands of every developer, without having to know deep learning technologies like speech recognition, has the potential of sparking innovation in entirely new categories of products and services. Developers can now build powerful conversational interfaces quickly and easily, that operate at any scale, on any device.

The speech recognition and natural language understanding technology behind Amazon Lex and Alexa is powered by deep learning models that have been trained on massive amounts of data. Developers can simply specify a few sample phrases and the information required to complete a user's task, and Lex builds the deep learning based intent model, guides the conversation, and executes the business logic using AWS Lambda. Developers can build, test, and deploy chatbots directly from the AWS Management Console. These chatbots can be accessed anywhere: from web applications, chat and messenger apps such as Facebook Messenger (with support for exporting to Alexa Skills Kit and Slack support coming soon), or connected devices. Developers can also effortlessly include their Amazon Lex bots in their own iOS and Android mobile apps using the new Conversational Bots feature in AWS Mobile Hub.

Recently, a few selected customers participated in a private beta of Amazon Lex. They provided us with valuable feedback as we rounded off Amazon Lex for a preview launch. I am excited to share some of the feedback from our beta customers HubSpot and Capital One.

HubSpot, a marketing and sales software leader, uses a chatbot called GrowthBot to help marketers and sales personnel be more productive by providing access to relevant data and services. Dharmesh Shah, HubSpot CTO and Founder, tells us that Amazon Lex enabled sophisticated natural language processing capabilities on GrowthBot to provide a more intuitive UI for customers. Hubspot could take advantage of advanced AI and ML capabilities provided by Amazon Lex, without having to code the algorithms.

Capital One offers a broad spectrum of financial products and services to consumers, small businesses, and commercial clients through a variety of channels. Firoze Lafeer, CTO Capital One Labs, tells us that Amazon Lex enables customers to query for information through voice or text in natural language and derive key insights into their accounts. Because Amazon Lex is powered by Alexa's technology, it provides Capital One with a high level of confidence that customer interactions are accurate, allowing easy deployment and scaling of bots.

Amazon Polly

The concept of a computer being able to speak with a human-like voice goes back almost as long as ENIAC (the first electronic programmable computer). The concept has been explored by many popular science fiction movies and TV shows, such as "2001: A Space Odyssey" with HAL-9000 or the Star Trek computer and Commander Data, which defined the perception of computer-generated speech.

Text-to-speech (TTS) systems have been largely adopted in a variety of real-life scenarios such as telephony systems with automated speech responses or help for visually or speech-impaired people. Prof. Stephen Hawking's voice is probably the most famous example of synthetic speech used to help the disabled.

TTS systems have continuously evolved through the last few decades and are nowadays capable of delivering a fairly natural-sounding speech. Today, TTS is used in a large variety of use cases and is turning into a ubiquitous element of user interfaces. Alexa and its TTS voice is yet another step towards building an intuitive and natural language interface that follows the pattern of human communication.

With Amazon Polly, we are making the same TTS technology used to build Alexa's voice to AWS customers. It is now available to any developer aiming to power their apps with high-quality spoken output.

In order to mimic human speech, we needed to address a variety of challenges. We needed to learn how to interpret various text structures such as acronyms, abbreviations, numbers, or homographs (words spelled the same but pronounced differently and having different meanings). For example:

_I heard that Outlander is a good read, though I haven’t read it yet_r

or

_St. Mary's Church is at 226 St. Mary's_ St._

Last but not least, as the quality of TTS gets better and better, we expect a natural intonation matching the semantics of synthesized texts. Traditional rule-based models and ML techniques, such as classification and regression trees (CART) and hidden Markov models (HMM) present limitations to model the complexity of this process. Deep learning has shown its capacity in representing complex and nonlinear relationships at different levels of speech synthesis process. The TTS technology behind Amazon Polly takes advantage of bidirectional long short-term memory (LSTM) networks using a massive amount of data to train models that convert letters to sounds and predict the intonation contour. This technology enables high naturalness, consistent intonation, and accurate processing of texts.

Amazon Polly customers have confirmed the high quality of generated speech for their use cases. Duolingo uses Amazon Polly voices for language learning applications, where quality is critical. Severin Hacker, the CTO of Duolingo, acknowledged that Amazon Polly voices are not just high in quality, but are as good as natural human speech for teaching a language.

The Royal National Institute of Blind People uses the Amazon TTS technology to support the visually impaired through their largest library of books in the UK. John Worsfold, Solutions Implementation Manager at RNIB, confirmed that Amazon Polly's incredibly lifelike voices captivate and engage RNIB readers.

Amazon Rekognition

We live in a world that is undergoing digital transformation at a rapid rate. One key outcome of this is the explosive growth of images generated and consumed by applications and services across different segments and industries. Whether it is a consumer app for photo sharing or printing, or the organization of images in the archives of media and news organizations, or filtering images for public safety and security, the need to derive insight from the visual content of the images continues to grow rapidly.

There is an inherent gap between the number of images created and stored, and the ability to capture the insight that can be derived from these images. Put simply, most image stores are not searchable, organized, or actionable. While a few solutions exist, customers have told us that they don't scale well, are not reliable, are too expensive, rely on complex pipelines to annotate, verify, and process massive amount of data for training and testing algorithms, need a team of highly specialized and skilled data scientists, and require costly and highly specialized hardware. For companies that have successfully built a pipeline for image analysis, the processes of maintaining, improving, and keeping up with the research in this space proves to be high friction. Amazon Rekognition solves these problems.

Amazon Rekognition is a fully managed, deep-learning–based image analysis service, built by our computer vision scientists with the same proven technology that has already analyzed billions of images daily on Amazon Prime Photos. Amazon Rekognition democratizes the application of deep learning technique for detecting objects, scenes, concepts, and faces in your images, comparing faces between two images, and performing search functionality across millions of facial feature vectors that your business can store with Amazon Rekognition. Amazon Rekognition's easy-to-use API, which is integrated with Amazon S3 and AWS Lambda, brings deep learning to your object store.

Getting started with Rekognition is simple. Let's walk through some of the core features of Rekognition that help you build powerful search, filter, organization, and verification applications for images.

Object and scene detection

Given an image, Amazon Rekognition detects objects, scenes, and concepts and then generates labels, each with a confidence score. Businesses can use this metadata to create searchable indexes for social sharing and printing apps, categorization for news and media image archives, or filters for targeted advertisement. If you are uploading your images to Amazon S3, it is easy to invoke an AWS Lambda function that passes the image to Amazon Rekognition and persist the labels with confidence scores into an Elasticsearch index.

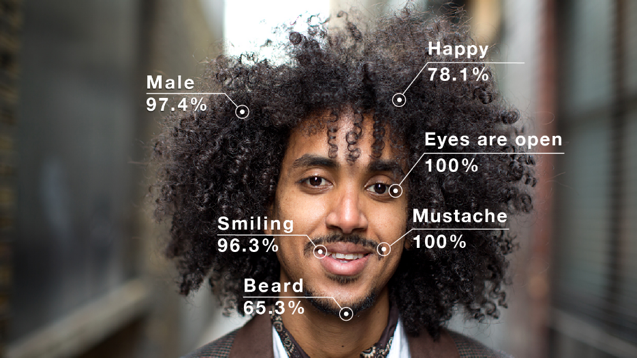

Facial Analysis

With any given image, you can now detect faces present, and derive face attributes like demographic information, sentiment, and key landmarks from the face. With this fast and accurate API, retail businesses can respond to their customers online or in store immediately by delivering targeted ads. Also, these attributes can be stored in Amazon Redshift to generate deeper insights of their customers.

Face recognition

Amazon Rekognition's face comparison and face search features can provide businesses with face-based authentication, verification of identity, and the ability to detect the presence of a specific person in a collection of images. Whether simply comparing faces present in two images using CompareFaces API, or creating a collection of faces by invoking Amazon Rekognition's IndexFace API, businesses can rely on our focus on security and privacy, as no images are stored by Rekognition. Each detected face is transformed into an irreversible vector representation, and this feature vector (and not the underlying image itself) is used for comparison and search.

I am pleased to share some of the positive feedbacks from our beta customers.

Redfin is a full-service brokerage that uses modern technology to help people buy and sell houses. Yong Huang, Director of Big Data & Analytics, Redfin, tell us that Redfin users love to browse images of properties on their site and mobile apps and they want to make it easier for their users to sift through hundreds of millions of listing and images. He also added that Amazon Rekognition generates a rich set of tags directly from images of properties. This makes it relatively simple for them to build a smart search feature that helps customers discover houses based on their specific needs. And, because Amazon Rekognition accepts Amazon S3 URLs, it is a huge time-saver for them to detect objects, scenes, and faces without having to move images around.

Summing it all up

We are in the early days of machine learning and artificial intelligence. As we say in Amazon, we are still in Day 1. Yet, we are already seeing the tremendous value and magical experience Amazon AI can bring to everyday apps. We want to enable all types of developers to build intelligence in to their applications. For data scientists, they can use our P2 instances, Amazon EMR Spark MLLib, deep learning AMIs, MxNet and Amazon ML to build their own ML models. For app developers, we believe that these three Amazon AI services enable them to build next-generation apps to hear, see, and speak with humans and the world around us.

We'll also be hosting a Machine Learning " State of the Union" that covers all the three new AmazonAI services announced today along with demos from Motorola Solutions and Ohio Health – head over to Mirage (as we added more seating!). Also, we have a series of breakout sessions on using MXNet at AWS re:Invent on November 30th at the Mirage Hotel in Las Vegas.