Using the Cloud to build highly efficient systems

These are times when many companies are focusing on the basics of their IT operations and asking themselves how they can operate more efficiently to make sure that every dollar is spent wisely. This is not the first time that we have gone through this cycle, but this time there are tools available to CIOs and CTOs that help them manage their IT budgets very differently. By using infrastructure as a service, basic IT costs are moved from a capital expense to a variable cost, building clearer relationships between expenditures and revenue-generating activities. CFOs are especially excited about the premise of this shift.

In recent weeks, in my discussions with many of our Amazon Web Services customers, I have seen a heightened interest in moving functionality into the AWS cloud to get a better grasp on controlling cost. And this is across the board: from young businesses to Fortune 500 enterprises, from research labs to television networks, all are concerned about reducing the upfront cost associated with new ventures and reducing waste in existing operations. Most of them point to three properties of the Amazon Web Services model that help them become more efficient:

The pay-as-you-go model. There are significant advantages to this model for efficiency, as one only pays for those resources one has actually consumed. If the application scales along the right revenue-generating dimensions, these costs will be in line with the revenue being generated.

Managing peak capacity. Many IT organizations need to maintain extra capacity for anticipated peak loads, capacity that sits idle most of the time. These peak loads can be driven by customer demand, as in the online world, but they can also come from essential IT tasks such as periodic document indexing or business tasks such as closing the books at the end of a quarter. Moving these peak workloads is often the first step that our enterprise customers take to become familiar with using infrastructure as a service. After successfully running some of their peak jobs, they then start moving more permanent processing into the cloud.

A great example in the online world is the Indy 500 organization, which normally runs 50 servers to serve its customers but during the races moves all of its processing into Amazon EC2 to handle all traffic, no matter how many hundreds of thousands of customers show up at the same time. The savings for the Indy IT budget during the races this spring were over 50%.

Higher reliability at lower cost. Negotiating several contracts with different datacenter and network providers to make sure that IT tasks can survive complex failure scenarios is difficult, and many organizations find it hard to achieve this in a cost-efficient manner. Amazon EC2, with its Regions and Availability Zones, gives its customers access to several high-end datacenters with highly redundant networking capabilities under a single pricing model, without any negotiations.
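To make the pay-as-you-go and peak-capacity arguments a bit more concrete, here is a minimal sketch in Python that compares provisioning for peak around the clock with paying only for the server-hours actually used. All of the numbers (a 720-hour month, a baseline of 10 servers, a 24-hour peak of 60 servers, a rate of 10 cents per server-hour) and the function names are hypothetical and chosen purely for illustration; they are not AWS prices or customer figures.

```python
def fixed_cost_cents(demand_per_hour, rate_cents):
    """Cost of owning enough servers for the peak during every hour."""
    return max(demand_per_hour) * len(demand_per_hour) * rate_cents


def pay_as_you_go_cost_cents(demand_per_hour, rate_cents):
    """Cost of paying only for the server-hours actually consumed."""
    return sum(demand_per_hour) * rate_cents


def utilization(demand_per_hour):
    """Fraction of peak-provisioned capacity that is actually used."""
    return sum(demand_per_hour) / (max(demand_per_hour) * len(demand_per_hour))


# Hypothetical month: 696 quiet hours at 10 servers, 24 peak hours at 60 servers.
demand = [10] * 696 + [60] * 24
rate_cents = 10  # assumed price per server-hour, in cents

fixed = fixed_cost_cents(demand, rate_cents)
elastic = pay_as_you_go_cost_cents(demand, rate_cents)
print(f"provisioned for peak: {fixed / 100:.2f}")
print(f"pay-as-you-go:        {elastic / 100:.2f}")
print(f"utilization of peak capacity: {utilization(demand):.1%}")
```

The more pronounced the peak is relative to the baseline, the lower the utilization of peak-provisioned capacity and the larger the gap between the two costs in this simple model.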

Amazon's efficiency principles

At Amazon we have a long history of implementing our services in a highly efficient manner. Whether these are our infrastructure services or our high-level ecommerce services, frugality is essential in our retail business. Margins in a retail business are traditionally small, and these constraints have driven major innovations in the way that we manage our IT capacity. We have developed a lot of expertise in building highly efficient architectures to support Amazon's goal of providing our customers with products at low prices. Every saving we have been able to make in our IT cost we have been able to give back to our customers in the form of lower prices. This tradition of letting customers benefit from our cost savings is something that we also apply to our Amazon Web Services business. When earlier this year we were able to negotiate better deals with our network providers, we immediately reduced the bandwidth cost for our customers.

But we have learned at Amazon that having a low-cost infrastructure is only the starting point of being as efficient as possible. You need to make sure that your applications make use of the infrastructure in an adaptive and scalable manner to achieve a high degree of efficiency. In the Amazon architecture, being incrementally scalable is key. This means that the main way services and applications handle increasing load or larger datasets is to grow one unit at a time. A more precise definition can be found here.

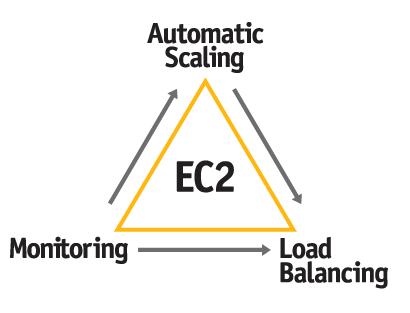

All services at Amazon are built to be horizontally scalable. An efficient request routing mechanism delivers requests to services in a manner that optimizes performance at a certain efficiency point. Capacity is acquired and released on short time frames to handle increases and decreases in resource usage. To achieve this automatic scaling of our services, four basic components need to work together:

Elastic Compute Capacity. The basic resources required to execute our services and applications need to be able to grow and shrink at a moment's notice in a fully automated fashion. This is the fundamental premise behind Amazon EC2; whenever an Amazon service requires additional capacity it can use a simple API call to acquire additional capacity without any interference from operators or data techs, and can release it when no longer needed.

Monitoring. We relentlessly measure every possible resource usage parameter, every application counter, and every customer's experience. Many gigabits per second of monitoring data flow continuously through the Amazon networks to make sure that our customers are getting serviced at the levels they can expect and at an efficiency level the business desires. We don't really care that much about averages or medians; for us, performance at the 99.9th percentile is what matters, to make sure that all our customers get the right experience.

Load balancing. Using the monitoring information, we route requests intelligently, using several algorithms, to those service instances that can provide responses with the expected performance. In reality, balancing the load is a secondary task of the request routing system, as it is the customer's experience we are most driven by. The optimization quest is to deliver the right customer experience at the optimal resource utilization.

Automatic scaling. Using monitoring data, load balancing and EC2, the auto-scaling service monitors service health and performance, and brings more capacity online if needed or reduces the number of instances to meet efficiency goals. It spreads instances over multiple Availability Zones and Regions to achieve the desired reliability guarantees. All without intervention by developers or operators.

These four services are the core of Amazon's highly efficient infrastructure that has allowed us to drive our IT costs to the floor for our retail operations.
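As an illustration of how monitoring at the 99.9th percentile, request routing and incremental scaling might fit together, here is a minimal sketch in Python. It is not how Amazon's services are implemented: the function names, the nearest-rank percentile method, the routing rule and the latency thresholds are all assumptions made for this example.

```python
import math
from fractions import Fraction


def percentile(samples, p):
    """Nearest-rank percentile: smallest sample with at least p% of samples <= it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    # Exact rational arithmetic avoids float rounding in the rank computation.
    rank = math.ceil(Fraction(str(p)) * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def pick_instance(latencies_by_instance):
    """Hypothetical routing rule: prefer the instance with the lowest p99.9 latency."""
    tails = {name: percentile(s, 99.9) for name, s in latencies_by_instance.items()}
    return min(tails, key=tails.get)


def desired_instances(current, p999_ms, target_ms, low_water_ms,
                      min_instances=1, max_instances=100):
    """Hypothetical scaling rule: grow or shrink one unit at a time."""
    if p999_ms > target_ms:
        return min(current + 1, max_instances)   # tail too slow: add one instance
    if p999_ms < low_water_ms:
        return max(current - 1, min_instances)   # ample headroom: release one
    return current


# Assumed sample: 998 fast requests at 20 ms and 2 slow ones at 900 ms.
latencies_ms = [20] * 998 + [900] * 2
mean_ms = sum(latencies_ms) / len(latencies_ms)
median_ms = percentile(latencies_ms, 50)
p999_ms = percentile(latencies_ms, 99.9)
print(mean_ms, median_ms, p999_ms)
print(desired_instances(4, p999_ms, target_ms=200, low_water_ms=50))
```

In this made-up sample the mean is about 21.8 ms and the median is 20 ms, so both look healthy, while the 99.9th percentile is 900 ms. That is why a scaling decision driven by the tail asks for one more instance here, where one driven by the average would not.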

Building highly efficient systems on AWS

To make sure our customers can also benefit from our experience in building highly efficient systems we have decided to release versions of these services on the Amazon Web Services platform. The Monitoring, Load Balancing and Auto-Scaling services will be combined with a Management Console that provides a simple, point-and-click web interface that lets you configure, manage and access your AWS cloud resources.

They will first be released in private beta and you can express your interest in that program on the AWS web site. More details can be found in the posting on the Amazon Web Services blog.

Graphic by Renato Valdés Olmos of Postmachina